Gradient Descent: How AI Learns and Why It Matters

When you hear about AI learning from data, what’s actually happening? At the heart of it is gradient descent, a mathematical method used to minimize error in machine learning models by iteratively adjusting parameters. Also known as steepest descent, it’s the quiet force behind most of the recommendations you get, the voice assistants that understand you, and the medical AI that spots tumors in scans. Without an optimizer like gradient descent, a model would have no systematic way to improve. It would just guess.

Think of it like finding the lowest point in a foggy valley. You can’t see the bottom, but you can feel which way the ground slopes. Gradient descent does exactly that: it measures the slope (the gradient) of the error with respect to the model’s parameters, takes a small step downhill, and repeats until the ground flattens out at a minimum, a point where small adjustments no longer bring the model’s predictions closer to reality. For complex models, that point may be a good local minimum rather than the single lowest point in the landscape. This process trains neural networks, computational systems inspired by the human brain that process data in layers to recognize patterns, and it belongs to the broader family of optimization algorithms, tools that find the best solution among countless possibilities, from pricing models to logistics routes. You won’t see it, but it shaped the model that predicts the next word you’ll type on your phone and the one that helps a self-driving car decide when to brake.
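To make the foggy-valley picture concrete, here is a minimal sketch in plain Python. The error function f(x) = (x - 3)^2, the starting point, and the names `gradient_descent` and `grad_f` are illustrative assumptions, not part of any particular library; real models repeat the same loop over thousands or millions of parameters.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from x0."""
    x = x0
    for _ in range(steps):
        # The gradient points uphill, so subtract it to move downhill.
        x = x - learning_rate * grad(x)
    return x


def grad_f(x):
    # Slope of the illustrative error function f(x) = (x - 3)^2.
    return 2.0 * (x - 3.0)


x_min = gradient_descent(grad_f, x0=0.0)
print(x_min)  # ends up very close to 3, the bottom of this particular valley
```

Each pass through the loop is one "step downhill": feel the slope, move a little in the opposite direction, and repeat.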

What makes gradient descent powerful isn’t just math. It’s scalability. It works whether you’re training a model on a few hundred data points or, using small random batches of the data at each step, millions of images. That’s why it’s the default choice in nearly every AI system today. But it’s not perfect. If the step size (the learning rate) is too large, the model overshoots the minimum and can bounce around or diverge. If the step is too small, or the slope is nearly flat, learning stalls. That’s why engineers tune learning rates, add momentum, or switch to adaptive variants like Adam or RMSprop. These aren’t magic. They’re just better ways to walk downhill.
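The effect of the learning rate is easy to see on a toy problem. The sketch below assumes the error function f(x) = x^2 (minimum at 0) and uses hypothetical helper names `run` and `run_with_momentum`; the momentum update shown is the classic "heavy ball" form, one common formulation among several.

```python
def run(learning_rate, steps=50, x0=1.0):
    """Plain gradient descent on f(x) = x^2, whose gradient is 2x."""
    x = x0
    for _ in range(steps):
        x -= learning_rate * 2.0 * x
    return x


def run_with_momentum(learning_rate, beta=0.9, steps=50, x0=1.0):
    """Gradient descent with momentum: keep a running velocity."""
    x, velocity = x0, 0.0
    for _ in range(steps):
        # Blend the previous velocity with the new downhill step.
        velocity = beta * velocity - learning_rate * 2.0 * x
        x += velocity
    return x


too_large = run(1.1)        # each step overshoots; x grows instead of shrinking
too_small = run(0.001)      # barely moves from the start in 50 steps
reasonable = run(0.1)       # settles close to the minimum at 0
with_momentum = run_with_momentum(0.001)  # same tiny rate, noticeably more progress

print(too_large, too_small, reasonable, with_momentum)
```

Adaptive methods such as Adam and RMSprop go a step further and adjust the effective step size per parameter, but the underlying loop is the same.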

What you’ll find in this collection isn’t a textbook on calculus. It’s real-world examples of how gradient descent shows up in places you didn’t expect. From AI in banking deciding who gets a loan, to nanotech drugs being optimized for precision delivery, to how climate models adjust to match real-world data—gradient descent is quietly making those systems smarter. You don’t need to code it to understand why it matters. You just need to know that every time AI gets better, it’s because someone figured out how to make it take a better step down the hill.

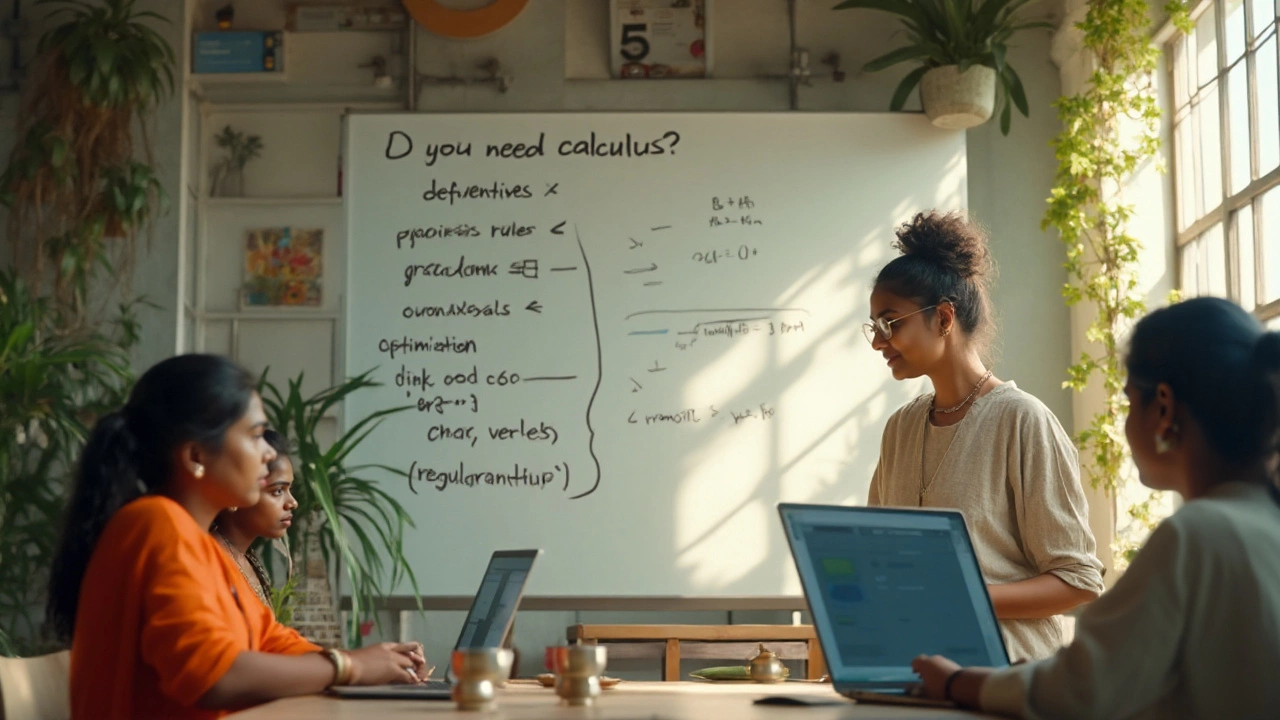

Does Data Science Require Calculus? What You Really Need in 2025

Sep 7, 2025

Do you need calculus for data science? A clear answer, where calculus actually matters, the exact topics to learn, and a simple path for analysts, ML engineers, and researchers in 2025.
