Does Data Science Require Calculus? What You Really Need in 2025

Sep 7, 2025

Hot take: you can get hired in data science without calculus, but there’s a catch. If you’re doing analytics, dashboards, and most classical ML with off‑the‑shelf tools, you’ll be fine with strong stats and Python. If you’re building models, tuning training loops, or doing serious Bayesian work, calculus shows up fast. Here’s the clean answer, the minimal topics that matter, when you can skip it, and how to learn it without losing months.

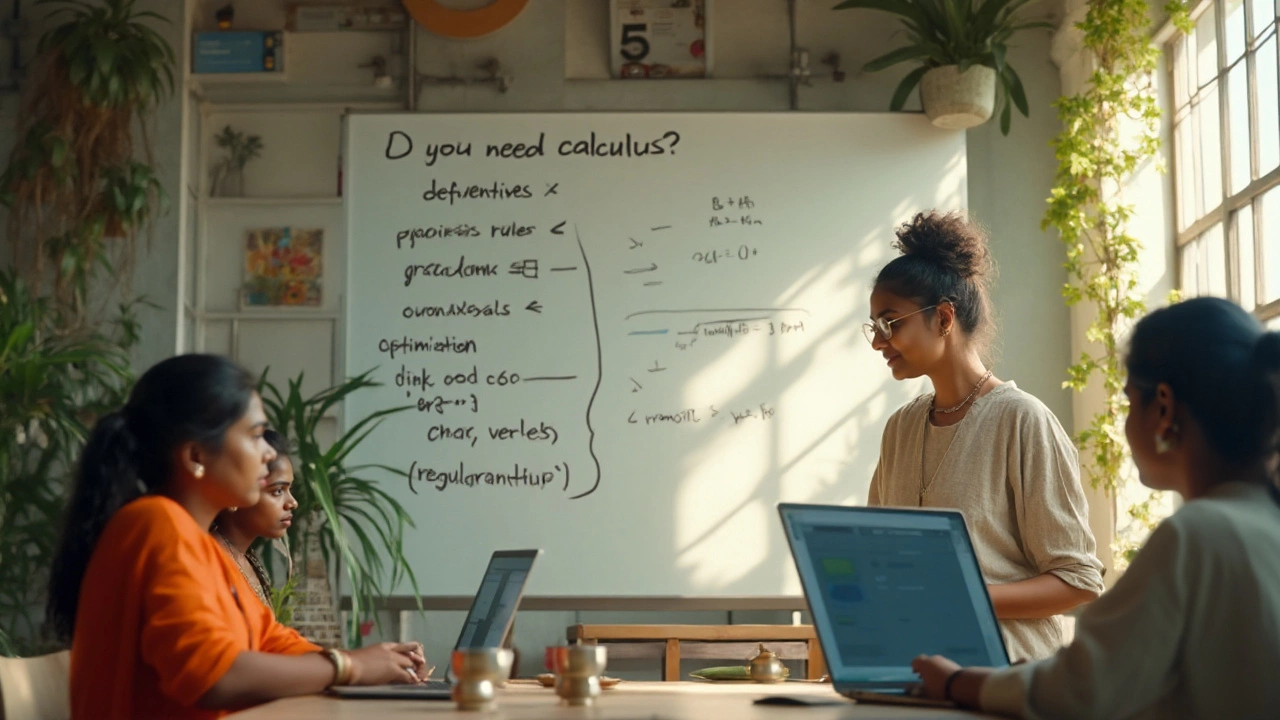

TL;DR: Do you need calculus for data science?

- Short answer: No for everyday analytics and applied ML; yes for model-building, advanced ML, and Bayesian methods.

- What to actually learn: derivatives (including partials), chain rule, gradients, basic optimization intuition, simple integrals for probability, and the idea of expectations.

- What matters more at first: statistics, probability, SQL, Python, and business context. Those ship value faster.

- Tools help: libraries use automatic differentiation, so you rarely hand-derive gradients. But knowing what a gradient is makes debugging and tuning far easier.

- Roles split: analysts and data engineers can go far with little calculus; ML engineers and researchers need it. For LLM finetuning, light calculus helps; for training from scratch, you need more.

Where calculus shows up in data science (and where it doesn’t)

If you’re wondering whether calculus is gatekeeping or actually useful, here’s the no-spin view. Calculus matters when you optimize something continuous or reason about continuous probabilities. That’s the core: change and accumulation. If your day job is joins, metrics, and modeling with defaults, you’ll touch calculus concepts, but you won’t live in them.

Calculus for data science shows up in a few recurring places:

- Gradient descent and friends: training linear/logistic regression, neural networks, and almost any model trained by minimizing a loss. You need derivatives, the chain rule, and gradients.

- Regularization: L2 is about squared norms; L1 has a kink (absolute value). Understanding their geometry (smooth vs. non-smooth) helps you set penalties and interpret results.

- Probability and expectations: integrals for continuous PDFs, normalizing constants, and expected values. Even if you don’t compute integrals by hand, you should know what an integral means.

- Kernels and Gaussian Processes: dot products sit in linear algebra, but kernel tricks and GP inference lean on calculus-heavy derivations.

- Bayesian methods: posterior = likelihood × prior / evidence. The “evidence” can be an integral that’s intractable, which is why we use MCMC or variational inference.

- Backpropagation: it’s just the chain rule organized efficiently. Autograd does it for you, but understanding the chain rule helps you pick activations, losses, and learning rates wisely.
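
For reference, three of the ideas in the list above fit in a few lines of notation. The symbols are notational assumptions rather than anything tied to a specific library: \theta for parameters, \eta for the learning rate, L for the loss, and x for observed data.

```latex
% Gradient descent: step against the gradient of the loss
\theta_{t+1} = \theta_t - \eta \, \nabla_\theta L(\theta_t)

% Chain rule, the building block of backpropagation, for L = f(g(\theta))
\frac{\partial L}{\partial \theta}
  = \frac{\partial f}{\partial g} \cdot \frac{\partial g}{\partial \theta}

% Bayes' rule, with the evidence written as an integral over the parameters
p(\theta \mid x) = \frac{p(x \mid \theta)\, p(\theta)}{p(x)},
\qquad
p(x) = \int p(x \mid \theta)\, p(\theta)\, d\theta
```

The last integral is the one that is often intractable, which is what pushes people toward MCMC or variational inference.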

Where calculus matters less:

- Business analytics and product data science: SQL, metrics, experimentation, causal reasoning, and clear storytelling beat calculus on most days.

- Feature engineering and classic ML with scikit-learn/XGBoost: you need data cleaning chops, leakage control, and validation skills more than hand-derived math.

- Data engineering: pipelines, warehousing, orchestration, and reliability. Think systems, not derivatives.

Here’s a quick role guide for 2025:

| Role | Calculus depth | Why |
|---|---|---|
| Business/Data Analyst | Minimal | Focus on SQL, dashboards, A/B tests, and metrics. Stats > calculus. |
| Applied Data Scientist (classical ML) | Light to moderate | Know gradients, losses, regularization. Libraries handle the rest. |
| ML Engineer (deep learning, LLM finetuning) | Moderate | Understand backprop, optimization, initialization, scheduling. |
| Research/Algorithm Engineer | High | Derivations, proofs, new methods, Bayesian inference details. |
| Data Engineer | Minimal | Systems, not continuous optimization. |

Why listen to this split? Because curricula and practice line up. The ACM Data Science Curriculum (2021) lists calculus as foundational but not the first stop. The U.S. National Academies’ “Data Science for Undergraduates” (2018; follow‑ups through 2020) emphasizes probability, modeling, and computing, with calculus supporting ML depth. University ML courses like Stanford’s CS229 (Andrew Ng, multivariate calculus throughout) and standard texts (Bishop’s Pattern Recognition and Machine Learning, 2006, and Goodfellow et al.’s Deep Learning, 2016) lean on gradients, chain rule, and expectations. Modern frameworks (PyTorch 2.x, JAX) add automatic differentiation so you don’t do the algebra by hand; you still benefit from knowing what’s being computed.

2025 twist: GenAI changed how teams ship. You can build strong prototypes with retrieval-augmented generation, prompt pipelines, and low‑rank adaptations (LoRA) without heavy calculus. But if you venture into pretraining, RLHF/RLAIF, diffusion models, or uncertainty quantification, the calculus reappears.

A practical, no-drama learning path

You don’t need a semester-long detour. You need the right 20%. Here’s a tight path that covers what most practitioners actually use, plus an on‑ramp if you want to go deeper later.

Step-by-step plan

- Start with statistics and probability (2-4 weeks): descriptive stats, distributions, confidence intervals, hypothesis tests, A/B testing, Bayes rule. This gives you immediate wins on the job.

- Pick up linear algebra basics (2-3 weeks): vectors, matrices, dot product, matrix multiplication, norms, eigenvalues/eigenvectors intuition, SVD idea. You’ll see these in PCA, embeddings, and regularization.

- Add minimal calculus (2-3 weeks): limits (intuitively), derivatives (including partials), chain rule, gradient and directional derivative, simple definite integrals, expectation as a weighted sum/integral. Stop here unless you’re going deep.

- Connect to ML right away (ongoing): code logistic regression from scratch with gradient descent. Watch the loss go down, tune learning rate, add L2, and see what changes.

- Optional depth (4-8 weeks if needed): convex optimization basics (optimality conditions), Jacobians/Hessians intuition, multivariate change of variables (for probability), KL divergence and variational inference idea, and a taste of stochastic calculus only if you’re doing time‑series modeling at the research level.
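
The "connect to ML right away" step can be sketched in plain Python with no dependencies. This is a minimal illustration, not a reference implementation: the synthetic one-feature dataset, the function names (`sigmoid`, `log_loss`, `fit`), and the hyperparameters are all assumptions chosen to keep it short.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def log_loss(w, b, xs, ys, l2):
    # Mean cross-entropy plus an L2 penalty on the weight (not the bias)
    eps = 1e-12
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(xs) + 0.5 * l2 * w * w


def fit(xs, ys, lr=0.5, l2=0.0, steps=500):
    # Full-batch gradient descent on the penalized loss
    w, b = 0.0, 0.0
    n = len(xs)
    history = []
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # dLoss/dz for one example
            grad_w += err * x
            grad_b += err
        grad_w = grad_w / n + l2 * w  # the L2 term adds l2 * w to the gradient
        grad_b /= n
        w -= lr * grad_w
        b -= lr * grad_b
        history.append(log_loss(w, b, xs, ys, l2))
    return w, b, history


# Hypothetical data: the label is 1 when x plus some noise is positive
rng = random.Random(0)
xs = [rng.gauss(0.0, 1.0) for _ in range(200)]
ys = [1 if x + rng.gauss(0.0, 0.5) > 0 else 0 for x in xs]

w_plain, b_plain, hist_plain = fit(xs, ys, l2=0.0)
w_reg, b_reg, hist_reg = fit(xs, ys, l2=0.1)
print(f"no penalty: w={w_plain:.3f}, final loss={hist_plain[-1]:.4f}")
print(f"l2=0.1:     w={w_reg:.3f}, final loss={hist_reg[-1]:.4f}")
```

Plotting `hist_plain` against the step index gives the "watch the loss go down" curve, and comparing `w_plain` with `w_reg` shows the penalty pulling the weight toward zero.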

The exact calculus topics that pay off

- Derivative basics: power rule, product rule, chain rule. For multivariate: partial derivatives and the gradient vector.

- Optimization intuition: local vs. global minima, learning rate trade‑offs, why curvature (Hessians) makes training harder or easier.

- Integrals for probability: continuous PDFs, CDFs, expectations as integrals, area under curves (ROC/AUC is an area too, but you can reason about it geometrically without integrating by hand).

- Regularization geometry: L2 (smooth, spreads penalty), L1 (sparse solutions via a non-smooth corner).
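
The smooth-versus-corner difference in the last bullet is easiest to see in one dimension. The sketch below assumes a toy objective: a single weight `w` with unpenalized optimum `z` and quadratic loss `0.5 * (w - z)**2`. The function names and the sample values are illustrative assumptions.

```python
def l2_shrink(z, lam):
    # Minimizer of 0.5 * (w - z)**2 + 0.5 * lam * w**2.
    # Setting the derivative (w - z) + lam * w to zero gives w = z / (1 + lam).
    return z / (1.0 + lam)


def l1_shrink(z, lam):
    # Minimizer of 0.5 * (w - z)**2 + lam * abs(w), known as soft-thresholding.
    # The kink of abs(w) at 0 makes w = 0 optimal whenever abs(z) <= lam.
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


unpenalized = [3.0, -1.5, 0.4, -0.2]  # hypothetical unpenalized weights
lam = 0.5
l2_weights = [l2_shrink(z, lam) for z in unpenalized]
l1_weights = [l1_shrink(z, lam) for z in unpenalized]
print("L2:", l2_weights)
print("L1:", l1_weights)
```

L2 scales every weight down by the same factor and never lands exactly on zero, while L1 subtracts a fixed amount and snaps the two small weights to exactly zero. That is the sparsity the corner buys you.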

Rules of thumb

- If you can explain gradient descent with arrows on a hill, you’re calculus‑competent for most applied work.

- If you can derive the gradient of a simple loss once, you’ll understand every training loop better.

- If you can state what an expectation is (weighted average with probabilities), you can handle most of Bayesian intuition used in practice.

- If you need MCMC or variational inference, plan for more calculus and measure-theory‑lite probability.
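
To make the expectation rule of thumb concrete, here is a small sketch that estimates two expectations of a normal distribution by averaging samples. The mean, standard deviation, seed, and sample size are arbitrary assumptions picked for illustration.

```python
import random
import statistics

rng = random.Random(42)
mu, sigma = 2.0, 3.0
samples = [rng.gauss(mu, sigma) for _ in range(100_000)]

# E[X] is the integral of x * p(x) dx; the sample mean approximates it.
mean_estimate = statistics.fmean(samples)

# E[X^2] is the integral of x^2 * p(x) dx; for a normal distribution
# the exact value is sigma^2 + mu^2, which is 13 here.
second_moment_estimate = statistics.fmean(x * x for x in samples)

print(f"E[X]   estimate: {mean_estimate:.3f} (exact {mu})")
print(f"E[X^2] estimate: {second_moment_estimate:.3f} (exact {sigma**2 + mu**2})")
```

Averaging samples and integrating against the density are two routes to the same number, which is why sampling methods can stand in for integrals you cannot do by hand.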

Decision guide

- Want to be a data analyst or product data scientist? Focus on stats, SQL, experimentation. Learn derivative intuition later.

- Doing applied ML with scikit‑learn/XGBoost? Learn gradients/chain rule and regularization. That’s enough to tune and debug.

- Building deep models or finetuning LLMs? Add multivariate calculus and optimization basics. Understand vanishing/exploding gradients and initialization.

- Research, probabilistic modeling, or building training algorithms? Commit to full multivariate calculus and some measure‑theoretic probability.

Tiny practice plan (hands-on, 3 evenings)

- Evening 1: Write a tiny gradient descent that fits y = ax + b to points. Plot loss vs. iterations. Change learning rates and watch what happens.

- Evening 2: Implement logistic regression with L2. Observe how regularization changes the weights and calibration.

- Evening 3: Sample from a normal distribution. Estimate expectations by averaging samples. Connect that to the integral definition of expectation.
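
Evening 1 might look something like the sketch below. It uses only the standard library and prints the final loss instead of plotting it. The true slope and intercept (2 and 1), the noise level, and the list of learning rates are assumptions made up for the demo.

```python
import random


def fit_line(xs, ys, lr, steps=200):
    """Fit y = a*x + b by gradient descent on mean squared error."""
    a, b = 0.0, 0.0
    n = len(xs)
    losses = []
    for _ in range(steps):
        residuals = [a * x + b - y for x, y in zip(xs, ys)]
        losses.append(sum(r * r for r in residuals) / n)
        # Partial derivatives of the MSE with respect to a and b
        grad_a = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2.0 * sum(residuals) / n
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b, losses


# Hypothetical data: points scattered around y = 2x + 1
rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(100)]
ys = [2.0 * x + 1.0 + rng.gauss(0.0, 0.1) for x in xs]

results = {}
for lr in (0.01, 0.1, 0.5, 1.2):
    results[lr] = fit_line(xs, ys, lr)
    a, b, losses = results[lr]
    print(f"lr={lr}: a={a:.4g}, b={b:.4g}, final loss={losses[-1]:.4g}")
```

On this data the smallest rate should still be short of the true slope after 200 steps, the middle rates should settle near a = 2 and b = 1, and the largest one should blow up. Plot `losses` for each rate to see the three regimes side by side.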

Trusted sources to anchor your learning

- ACM Data Science Curriculum Guidelines (2021): places calculus as foundational but paired with computing and stats.

- National Academies (2018-2020), “Data Science for Undergraduates”: emphasizes probability/modeling; calculus supports ML depth.

- Stanford CS229 (Andrew Ng): multivariate calculus across logistic regression, SVMs, and EM.

- Goodfellow, Bengio, Courville (2016), Deep Learning: gradients/backprop and optimization, the calculus backbone.

- Bishop (2006), Pattern Recognition and Machine Learning: expectations, derivatives of log-likelihoods, and Bayesian calculus.

Cheat sheet: the minimum viable math kit

- Linear algebra: vector, matrix, dot product, norm, eigenvalue intuition.

- Calculus: derivative, chain rule, gradient, simple integrals, expectation.

- Probability/stats: distributions, conditional probability, CI, hypothesis testing, simple Bayesian updates.

- Computing: Python, NumPy, pandas, scikit‑learn, PyTorch/JAX basics.

FAQ, pitfalls, and next steps

FAQ

- Can I get a data science job with zero calculus? Yes, as an analyst or applied DS in many teams. You’ll need strong stats, SQL, and Python. As your work leans into ML, pick up calculus basics.

- Is statistics more important than calculus? For most roles, yes. Stats powers experimentation, metrics, and inference. Calculus powers optimization and some probability.

- Do LLMs and GenAI reduce the need for calculus? For building apps, yes, somewhat. For training, finetuning, and evaluating model behavior, calculus still matters.

- Do I need multivariable integrals and measure theory? Only if you’re in research or advanced Bayesian modeling. Most practitioners don’t go there.

- Will autograd make calculus knowledge obsolete? No. It hides algebra but not the ideas. You still choose losses, activations, and learning rates, and you still need to reason about gradients.

- How much calculus for time‑series? Minimal for forecasting with ARIMA/Prophet. More for state‑space models and continuous‑time stochastic processes.

- What about convex optimization? Helpful if you tune solvers or design losses. For day‑to‑day modeling, you only need the intuition.

Common pitfalls

- Overstudying before building: Months of calculus with no projects hurts momentum. Learn the small set, then code.

- Confusing memorization with intuition: Knowing 20 derivative rules won’t help if you can’t explain a gradient as a direction of steepest change.

- Ignoring probability: Many data questions are random, not deterministic. A good grasp of uncertainty beats raw calculus skill.

- Skipping validation: Even perfect math fails if you leak data or overfit. Split your data right, log experiments, and track metrics.

Pro tips

- Explain in pictures: draw loss surfaces, contour lines, and gradient arrows. It sticks.

- Differentiate once by hand: do it for MSE and logistic loss. That one exercise demystifies training.

- Use autograd to learn: write a model, then compare your hand-derived gradient with torch.autograd. You’ll spot gaps fast.

- Tune by principles: learning rate affects step size; momentum fights noise; weight decay acts like L2 regularization (exactly so for plain SGD, only approximately for adaptive optimizers such as Adam). Each has a simple calculus story.
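
For the "differentiate once by hand" tip, the two results you should end up with are written out below. This assumes a linear model with weights w, inputs x_i, targets y_i, n examples, and \sigma for the logistic function; that notation is an assumption for the sketch, not something fixed by any library.

```latex
% Mean squared error for a linear model
L_{\text{MSE}}(w) = \frac{1}{n} \sum_{i=1}^{n} \left( w^\top x_i - y_i \right)^2
\quad\Longrightarrow\quad
\nabla_w L_{\text{MSE}} = \frac{2}{n} \sum_{i=1}^{n} \left( w^\top x_i - y_i \right) x_i

% Logistic loss with p_i = \sigma(w^\top x_i), using \sigma'(z) = \sigma(z)\,(1 - \sigma(z))
L_{\text{log}}(w) = -\frac{1}{n} \sum_{i=1}^{n}
  \left[ y_i \log p_i + (1 - y_i) \log (1 - p_i) \right]
\quad\Longrightarrow\quad
\nabla_w L_{\text{log}} = \frac{1}{n} \sum_{i=1}^{n} \left( p_i - y_i \right) x_i
```

Both gradients have the same shape: prediction error times input, averaged over the data. Seeing that once is most of the demystification.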

Next steps by persona

- If you’re a career switcher (1-2 months plan): build SQL + Python + stats; ship two small projects (A/B test analysis and a churn model). Learn derivatives/chain rule alongside the churn model training.

- If you’re an analyst moving into ML (1 month): take your best dashboard metric and predict it with a simple model. Add L2 regularization. Learn gradients as you go.

- If you’re aiming at ML engineering (2-3 months): code logistic/linear regression from scratch; implement mini‑batch GD; read one chapter on optimization; build a small CNN or transformer finetune with PyTorch. Understand vanishing gradients and initialization.

- If you’re research‑curious (3-6 months): study multivariate calculus, convex analysis basics, variational inference, and MCMC. Reproduce one method from a paper.

Troubleshooting your learning

- Stuck on the chain rule? Compute a gradient numerically (finite differences) and compare with your analytic result. If they disagree, your algebra likely slipped.

- Loss not decreasing? Check your learning rate first, then data preprocessing (standardization), then gradient shapes. Plot the loss, don’t guess.

- Overfitting despite regularization? Increase weight decay, add dropout for deep nets, or get more data/augmentation. Revisit leakage checks.

- Bayesian code too slow? Use variational inference or smaller models; many “impossible integrals” are approximated anyway.
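
The finite-difference check from the first bullet takes only a few lines. The sketch below assumes a one-weight logistic loss on a single example; the function names and the test point (`w = 0.7`, `x = 1.5`, `y = 1`) are made up for illustration.

```python
import math


def loss(w, x, y):
    # Logistic loss for one example with a single weight w
    p = 1.0 / (1.0 + math.exp(-w * x))
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def analytic_grad(w, x, y):
    # Hand-derived via the chain rule: dL/dw = (sigmoid(w * x) - y) * x
    p = 1.0 / (1.0 + math.exp(-w * x))
    return (p - y) * x


def numeric_grad(f, w, h=1e-5):
    # Central difference: (f(w + h) - f(w - h)) / (2 * h)
    return (f(w + h) - f(w - h)) / (2.0 * h)


w, x, y = 0.7, 1.5, 1
g_analytic = analytic_grad(w, x, y)
g_numeric = numeric_grad(lambda v: loss(v, x, y), w)
print(f"analytic={g_analytic:.8f} numeric={g_numeric:.8f}")
print(f"difference={abs(g_analytic - g_numeric):.2e}")
```

If the two numbers agree to several decimal places, the algebra is probably right. If they differ in the first or second digit, recheck the chain rule step by step.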

What to learn next (short list)

- Optimization: learn about learning-rate schedules, momentum, Adam vs. SGD trade‑offs, and when to use weight decay.

- Probability depth: KL divergence, cross‑entropy, and why they tie back to expectations and integrals.

- Model selection: cross‑validation, calibration, and uncertainty estimates. These make models useful in the real world.
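
As a small taste of the probability item above, cross-entropy splits into entropy plus KL divergence, and all three are expectations under the true distribution. The sketch below checks that identity on two discrete distributions; the values of `p` and `q` are arbitrary assumptions.

```python
import math


def entropy(p):
    # H(p) = -sum_i p_i * log(p_i), the expectation of -log p under p
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def cross_entropy(p, q):
    # H(p, q) = -sum_i p_i * log(q_i), the expectation of -log q under p
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)


def kl_divergence(p, q):
    # KL(p || q) = sum_i p_i * log(p_i / q_i)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


p = [0.7, 0.2, 0.1]  # hypothetical "true" distribution
q = [0.5, 0.3, 0.2]  # hypothetical model distribution

print(f"H(p)      = {entropy(p):.6f}")
print(f"KL(p||q)  = {kl_divergence(p, q):.6f}")
print(f"H(p, q)   = {cross_entropy(p, q):.6f}")
print(f"H(p) + KL = {entropy(p) + kl_divergence(p, q):.6f}")
```

Since H(p) does not depend on the model, minimizing cross-entropy over q is the same as minimizing the KL divergence. For continuous distributions the sums become integrals.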

The bottom line is simple: don’t let calculus stop you from starting, but don’t ignore it forever if you want to advance. Learn the small, focused set that shows up everywhere (derivatives, chain rule, gradients, simple integrals, and expectations) while you build projects that matter. You’ll feel the unlock the first time you fix a training issue by reasoning about the shape of your loss, not by random hyperparameter sweeps.